Self-supervised vision-language pretraining for Medical visual question answering

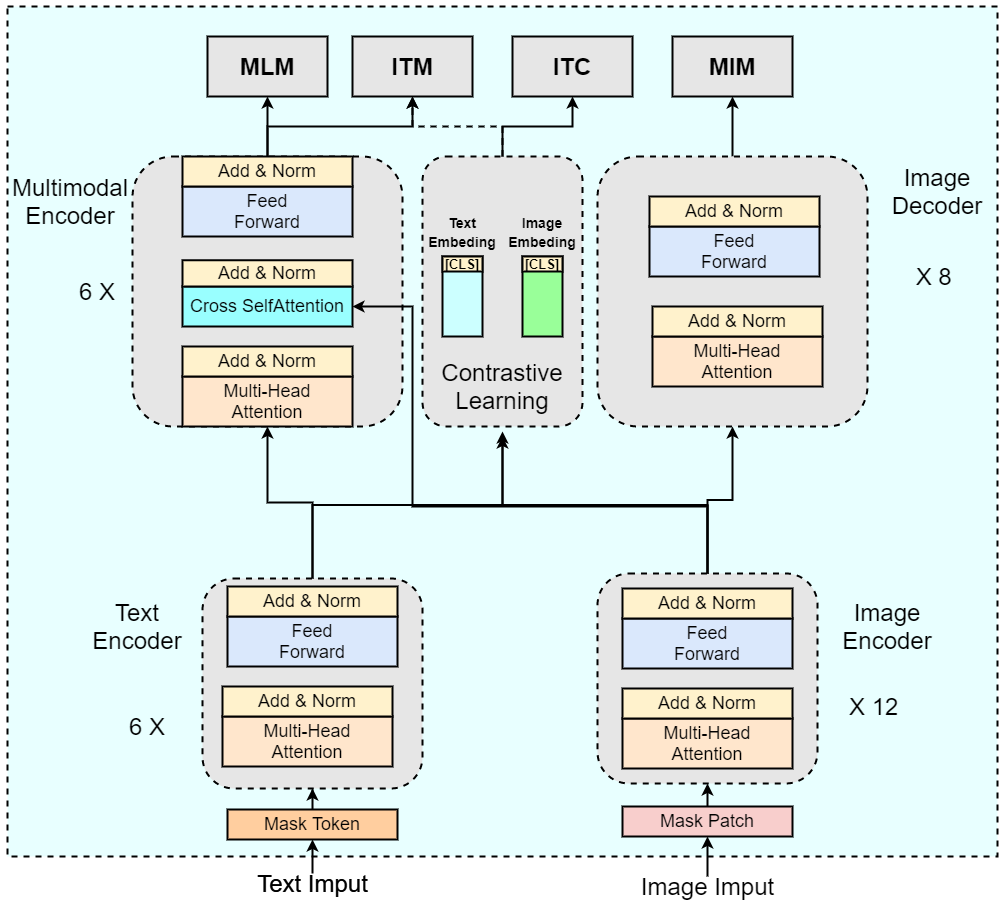

Medical image visual question answering (VQA) is the task of answering clinical questions about a given radiographic image. It is a challenging problem because the model must integrate both vision and language information. Training data for medical VQA is limited, so the pretrain-finetune paradigm is widely used to improve model generalization. In this paper, we propose a self-supervised method, M2I2, that combines Masked image modeling, Masked language modeling, Image-text matching and Image-text alignment via contrastive learning. M2I2 is pretrained on a medical image caption dataset and then fine-tuned on downstream medical VQA tasks. The proposed method achieves state-of-the-art performance on all three public medical VQA datasets. Our code and models are available at https://github.com/pengfeiliHEU/M2I2.
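The abstract names the four pretraining objectives but gives no formulas. Below is a minimal, dependency-free Python sketch of how such objectives are commonly set up, using toy list-based embeddings. It includes:

- a symmetric InfoNCE loss for image-text alignment;
- a random masking helper that could serve both masked language modeling (caption tokens) and masked image modeling (image patches);
- a simplified hard-negative picker for image-text matching;
- an equal-weight sum of the four losses.

All function names, the temperature of 0.07, the argmax hard-negative rule and the equal loss weights are illustrative assumptions. They are not taken from the M2I2 paper or its repository.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def cosine_sim_matrix(img: Sequence[Sequence[float]], txt: Sequence[Sequence[float]]) -> List[Vector]:
    """sim[i][j] = cosine similarity between image i and caption j."""
    img_n = [l2_normalize(v) for v in img]
    txt_n = [l2_normalize(v) for v in txt]
    return [[sum(a * b for a, b in zip(u, w)) for w in txt_n] for u in img_n]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Numerically stable -log softmax(logits)[target]."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(img, txt, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image i and caption i are the positive pair.

    The temperature value is an assumed, commonly used default.
    """
    sim = cosine_sim_matrix(img, txt)
    n = len(sim)
    i2t = sum(cross_entropy([s / temperature for s in sim[i]], i) for i in range(n)) / n
    t2i = sum(cross_entropy([sim[i][j] / temperature for i in range(n)], j) for j in range(n)) / n
    return (i2t + t2i) / 2


def random_mask(tokens: Sequence, mask_ratio: float, mask_token, rng: random.Random) -> Tuple[list, List[int]]:
    """Replace a fraction of tokens (caption tokens for MLM, patches for MIM) with mask_token.

    Returns the masked sequence and the sorted masked positions, which a
    model would be trained to reconstruct. Expects a non-empty sequence.
    """
    n_mask = max(1, round(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    for p in positions:
        masked[p] = mask_token
    return masked, positions


def hardest_negative_captions(img, txt) -> List[int]:
    """For each image, pick the most similar non-matching caption as an ITM negative.

    Simplified argmax rule (assumption); the actual sampler in M2I2 may differ.
    """
    sim = cosine_sim_matrix(img, txt)
    return [max((j for j in range(len(row)) if j != i), key=lambda j: row[j])
            for i, row in enumerate(sim)]


def total_loss(l_mim: float, l_mlm: float, l_itm: float, l_itc: float,
               weights: Tuple[float, float, float, float] = (1.0, 1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the four pretraining losses (equal weights are an assumption)."""
    return sum(w * l for w, l in zip(weights, (l_mim, l_mlm, l_itm, l_itc)))


if __name__ == "__main__":
    img = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print("aligned ITC loss:", contrastive_loss(img, img))
    print("shuffled ITC loss:", contrastive_loss(img, img[1:] + img[:1]))
    print(random_mask(list("abcdefghij"), 0.3, "[MASK]", random.Random(0)))
```

In a real model, the embeddings would come from the image and text encoders. The losses would be computed on tensors with automatic differentiation. Pure Python is used here only so that the sketch runs without dependencies.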


Datasets

VQA-RAD

SLAKE

PathVQA

SLAKE-English

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Medical Visual Question Answering | PathVQA | M2I2 | Free-form Accuracy | 36.3 | #3 |
| Medical Visual Question Answering | PathVQA | M2I2 | Yes/No Accuracy | 88.0 | #2 |
| Medical Visual Question Answering | PathVQA | M2I2 | Overall Accuracy | 62.2 | #3 |
| Medical Visual Question Answering | SLAKE-English | M2I2 | Overall Accuracy | 81.2 | #5 |
| Medical Visual Question Answering | SLAKE-English | M2I2 | Close-ended Accuracy | 91.1 | #1 |
| Medical Visual Question Answering | SLAKE-English | M2I2 | Open-ended Accuracy | 74.7 | #5 |
| Medical Visual Question Answering | VQA-RAD | M2I2 | Close-ended Accuracy | 83.5 | #6 |
| Medical Visual Question Answering | VQA-RAD | M2I2 | Open-ended Accuracy | 66.5 | #6 |
| Medical Visual Question Answering | VQA-RAD | M2I2 | Overall Accuracy | 76.8 | #5 |
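The table reports overall accuracy for each dataset alongside accuracies for two question types:

- close-ended (yes/no on PathVQA);
- open-ended (free-form on PathVQA).

Suppose overall accuracy is the question-weighted mean of the two categories. This is an assumption, because the page does not define the metric. Under that assumption, the three numbers imply the approximate share of close-ended questions in each test set. The sketch below performs only that arithmetic on the reported values. The name `implied_closed_fraction` is hypothetical, and because the inputs are rounded to one decimal place, the resulting fractions are approximate.

```python
# Reported M2I2 accuracies (%) from the table:
# (close-ended or yes/no, open-ended or free-form, overall)
REPORTED = {
    "VQA-RAD": (83.5, 66.5, 76.8),
    "SLAKE-English": (91.1, 74.7, 81.2),
    "PathVQA": (88.0, 36.3, 62.2),
}


def implied_closed_fraction(closed: float, open_: float, overall: float) -> float:
    """Solve overall = p * closed + (1 - p) * open_ for p.

    Assumes overall accuracy is a question-weighted mean of the two categories.
    """
    if closed == open_:
        raise ValueError("closed and open accuracies must differ to solve for p")
    return (overall - open_) / (closed - open_)


if __name__ == "__main__":
    for name, (closed, open_, overall) in REPORTED.items():
        p = implied_closed_fraction(closed, open_, overall)
        print(f"{name}: implied close-ended share ~ {p:.2f}")
```

On the reported numbers, this gives roughly 0.61 for VQA-RAD, 0.40 for SLAKE-English and 0.50 for PathVQA. A value outside [0, 1] would indicate that the weighted-mean assumption does not hold for that dataset.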