Scaling up Trustless DNN Inference with Zero-Knowledge Proofs

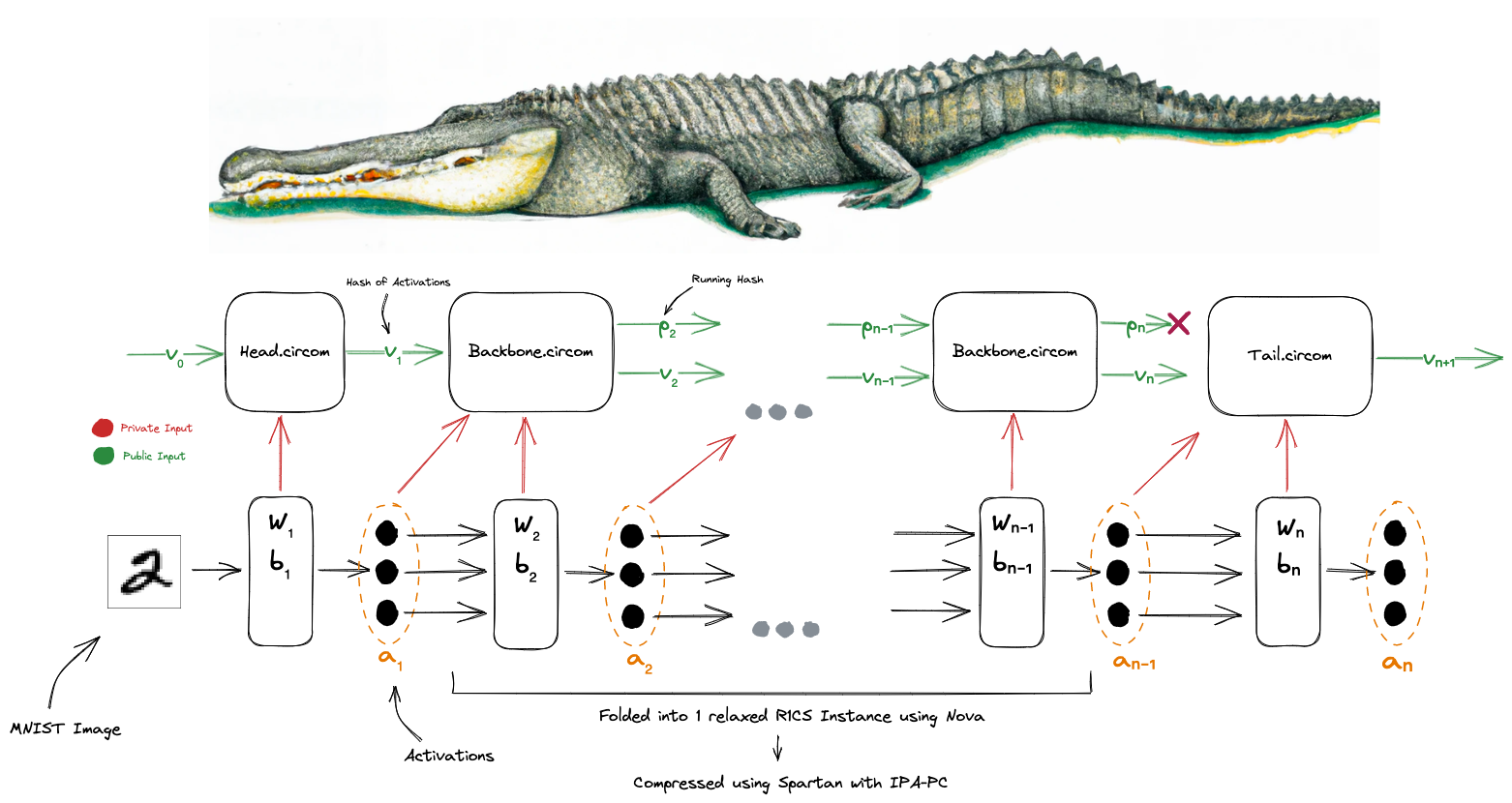

As ML models have grown in capability and accuracy, so has the complexity of their deployments. Increasingly, ML model consumers are turning to service providers to serve models in the ML-as-a-service (MLaaS) paradigm. As MLaaS proliferates, a critical requirement emerges: how can model consumers verify that the correct predictions were served, in the face of malicious, lazy, or buggy service providers? In this work, we present the first practical ImageNet-scale method to verify ML model inference non-interactively, i.e., after the inference has been done. To do so, we leverage recent developments in ZK-SNARKs (zero-knowledge succinct non-interactive arguments of knowledge), a form of zero-knowledge proof. ZK-SNARKs allow us to verify ML model execution non-interactively and with only standard cryptographic hardness assumptions. In particular, we provide the first ZK-SNARK proof of valid inference for a full-resolution ImageNet model, achieving 79% top-5 accuracy. We further use these ZK-SNARKs to design protocols that verify ML model execution in a variety of scenarios, including verifying MLaaS predictions, verifying MLaaS model accuracy, and using ML models for trustless retrieval. Together, our results show that ZK-SNARKs have the promise to make verified ML model inference practical.
