RSUD20K: A Dataset for Road Scene Understanding In Autonomous Driving

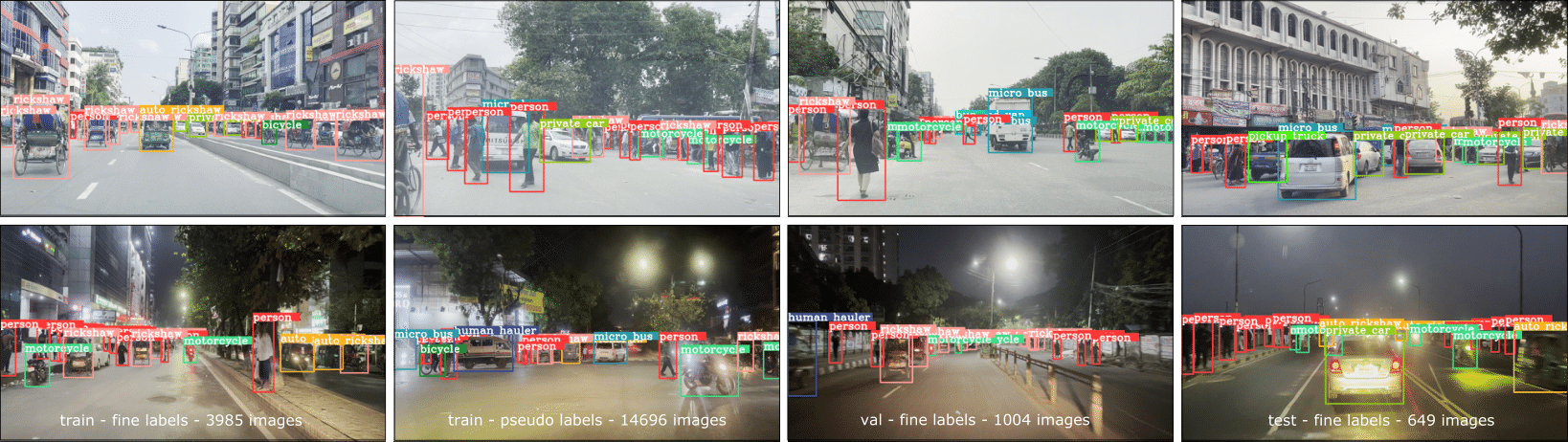

Road scene understanding is crucial in autonomous driving, enabling machines to perceive the visual environment. However, recent object detectors trained on datasets collected from particular geographical locations struggle to generalize to other locations. In this paper, we present RSUD20K, a new dataset for road scene understanding comprising over 20K high-resolution images captured from the driving perspective on Bangladesh roads, with 130K bounding box annotations for 13 object classes. This challenging dataset encompasses diverse road scenes, including narrow streets and highways, and features objects seen from different viewpoints, crowded environments with densely cluttered objects, and various weather conditions. Our work significantly improves upon previous efforts, providing detailed annotations and increased object complexity. We thoroughly examine the dataset, benchmarking various state-of-the-art object detectors and exploring large vision models as image annotators.
