NESS: Node Embeddings from Static SubGraphs

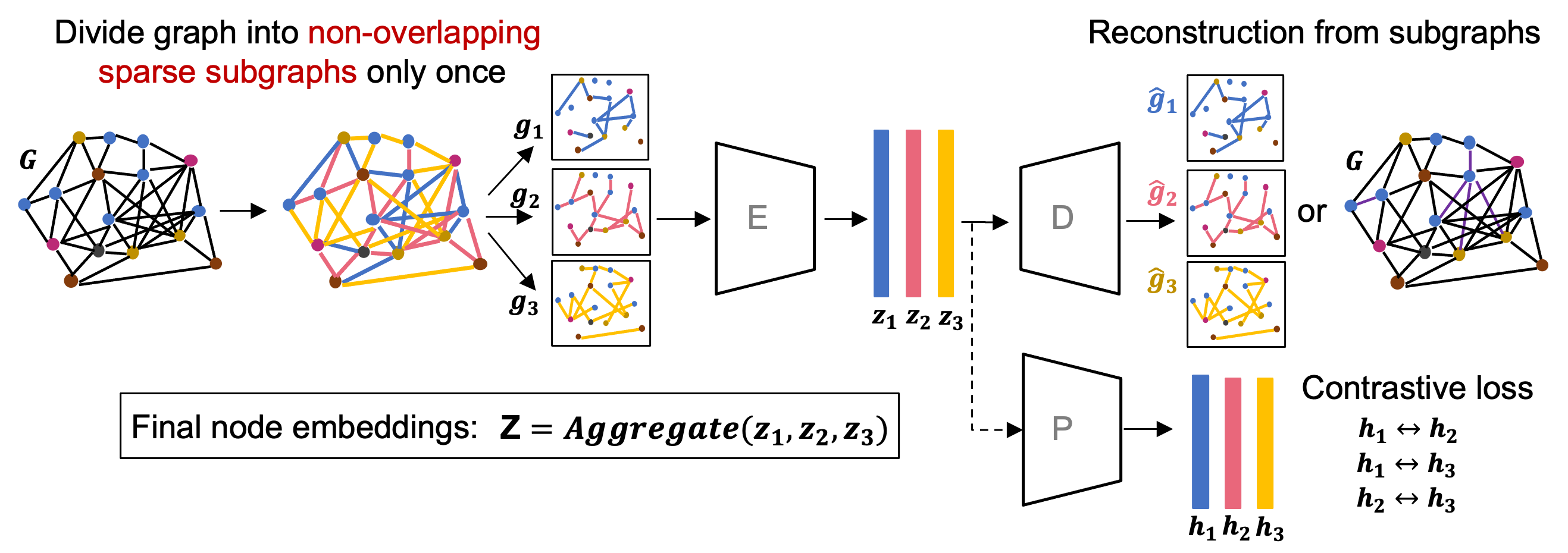

We present a framework for learning Node Embeddings from Static Subgraphs (NESS) using a graph autoencoder (GAE) in a transductive setting. NESS is based on two key ideas: i) partitioning the training graph into multiple static, sparse subgraphs with non-overlapping edges using a random edge split during data pre-processing, and ii) aggregating the node representations learned from each subgraph to obtain a joint representation of the graph at test time. Moreover, we propose an optional contrastive learning approach in the transductive setting. We demonstrate that NESS yields better node representations for link prediction tasks than current autoencoding methods that use either the whole graph or stochastic subgraphs. Our experiments also show that NESS improves the performance of a wide range of graph encoders and achieves state-of-the-art results for link prediction on multiple real-world datasets with edge homophily ratios ranging from strong heterophily to strong homophily.
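The two key ideas can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the function names `split_edges`, `toy_encoder`, and `aggregate_mean` are hypothetical, the encoder is a one-step neighbour average standing in for a trained GAE encoder, and element-wise mean aggregation is an assumption, since the abstract does not state which aggregation is used.

```python
import random

def split_edges(edges, k, seed=0):
    """Randomly partition an edge list into k subsets that share no edges."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    # Round-robin slicing keeps subset sizes within one edge of each other.
    return [shuffled[i::k] for i in range(k)]

def toy_encoder(num_nodes, features, sub_edges):
    """Stand-in for a GAE encoder (assumption): average each node's
    features with those of its neighbours in the given subgraph."""
    neighbours = {v: [v] for v in range(num_nodes)}  # include a self-loop
    for u, v in sub_edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    dim = len(features[0])
    return [
        [sum(features[n][d] for n in neighbours[v]) / len(neighbours[v])
         for d in range(dim)]
        for v in range(num_nodes)
    ]

def aggregate_mean(embeddings_per_subgraph):
    """Element-wise mean of the per-subgraph node embeddings (assumed choice)."""
    k = len(embeddings_per_subgraph)
    num_nodes = len(embeddings_per_subgraph[0])
    dim = len(embeddings_per_subgraph[0][0])
    return [
        [sum(emb[v][d] for emb in embeddings_per_subgraph) / k
         for d in range(dim)]
        for v in range(num_nodes)
    ]

# Toy graph: 5 nodes, 6 undirected edges, 2-dimensional node features.
edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 4), (3, 4)]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [2.0, 1.0]]

# i) Static split, done once during pre-processing.
subgraphs = split_edges(edges, k=3)

# ii) Encode every subgraph, then aggregate into one joint representation.
joint = aggregate_mean([toy_encoder(5, features, s) for s in subgraphs])
```

Because the split is fixed by the seed, every run sees the same subgraphs, which is what distinguishes this setup from sampling stochastic subgraphs at each training step.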


Cora
