Invisible Marker: Automatic Annotation of Segmentation Masks for Object Manipulation

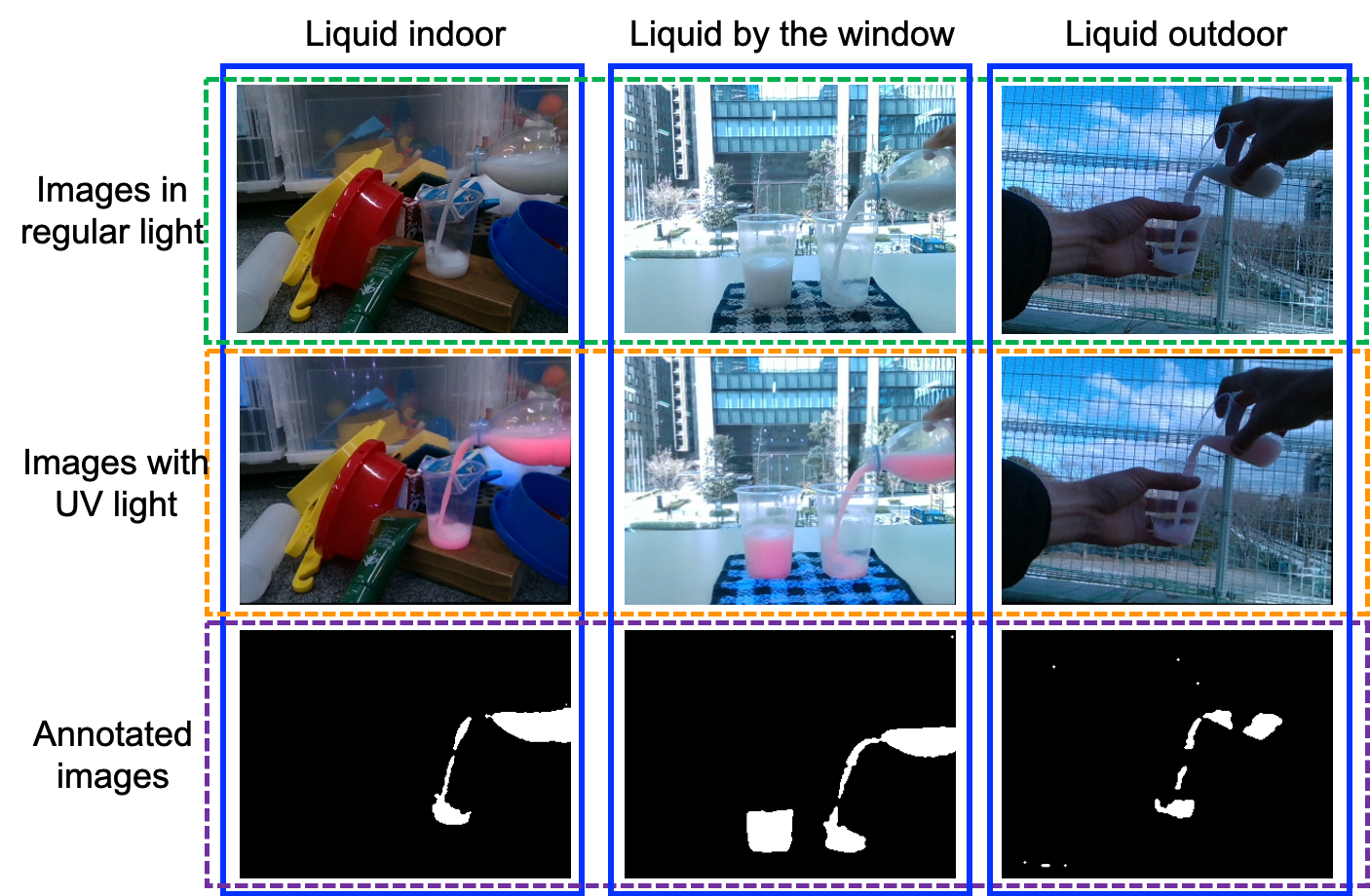

We propose a method for annotating segmentation masks accurately and automatically for object manipulation, using an invisible marker. The marker cannot be seen under regular (visible) light but becomes visible under invisible light, such as ultraviolet (UV) light. By painting objects with the invisible marker and capturing images while switching between regular and UV light at high speed, large annotated datasets can be created quickly and inexpensively. We compare our proposed method with manual annotation. We demonstrate semantic segmentation of deformable objects, including clothes, liquids, and powders, under controlled environmental lighting. In addition, we demonstrate liquid pouring tasks under uncontrolled environmental lighting in complex environments such as an office, a house, and outdoors. Furthermore, data can be captured while the camera is in motion, which makes it easier to collect large datasets, as shown in our demonstration.
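The pairing idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes frames arrive in strict visible/UV alternation, that the painted regions are simply brighter than the background in the UV-lit frame, and that a fixed intensity threshold separates them. The function names and the `threshold` parameter are hypothetical.

```python
import numpy as np


def uv_frame_to_mask(uv_frame, threshold=128):
    """Return a boolean mask that is True where the UV-lit frame is bright.

    Assumption: the marker fluoresces brightly under UV, so a fixed
    intensity threshold is enough to separate it from the background.
    """
    # Collapse colour channels to a single intensity image if needed.
    gray = uv_frame.mean(axis=-1) if uv_frame.ndim == 3 else uv_frame
    return gray > threshold


def pair_alternating_frames(frames, threshold=128):
    """Pair each visible-light frame with a mask from the next UV frame.

    Assumption: frames are ordered visible, UV, visible, UV, ...
    A trailing visible frame with no UV partner is dropped.
    """
    pairs = []
    for i in range(0, len(frames) - 1, 2):
        visible, uv = frames[i], frames[i + 1]
        pairs.append((visible, uv_frame_to_mask(uv, threshold)))
    return pairs


if __name__ == "__main__":
    # Synthetic example: a 4x4 scene with a 2x2 painted patch.
    visible = np.zeros((4, 4, 3), dtype=np.uint8)
    uv = np.zeros((4, 4, 3), dtype=np.uint8)
    uv[1:3, 1:3] = 255  # the marker lights up only under UV
    dataset = pair_alternating_frames([visible, uv])
    image, mask = dataset[0]
    print(mask.astype(int))
```

In this sketch each visible-light image receives its mask from the UV frame captured immediately afterwards, so the faster the lights switch, the less the scene can move between the two exposures.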
