From system models to class models: An in-context learning paradigm

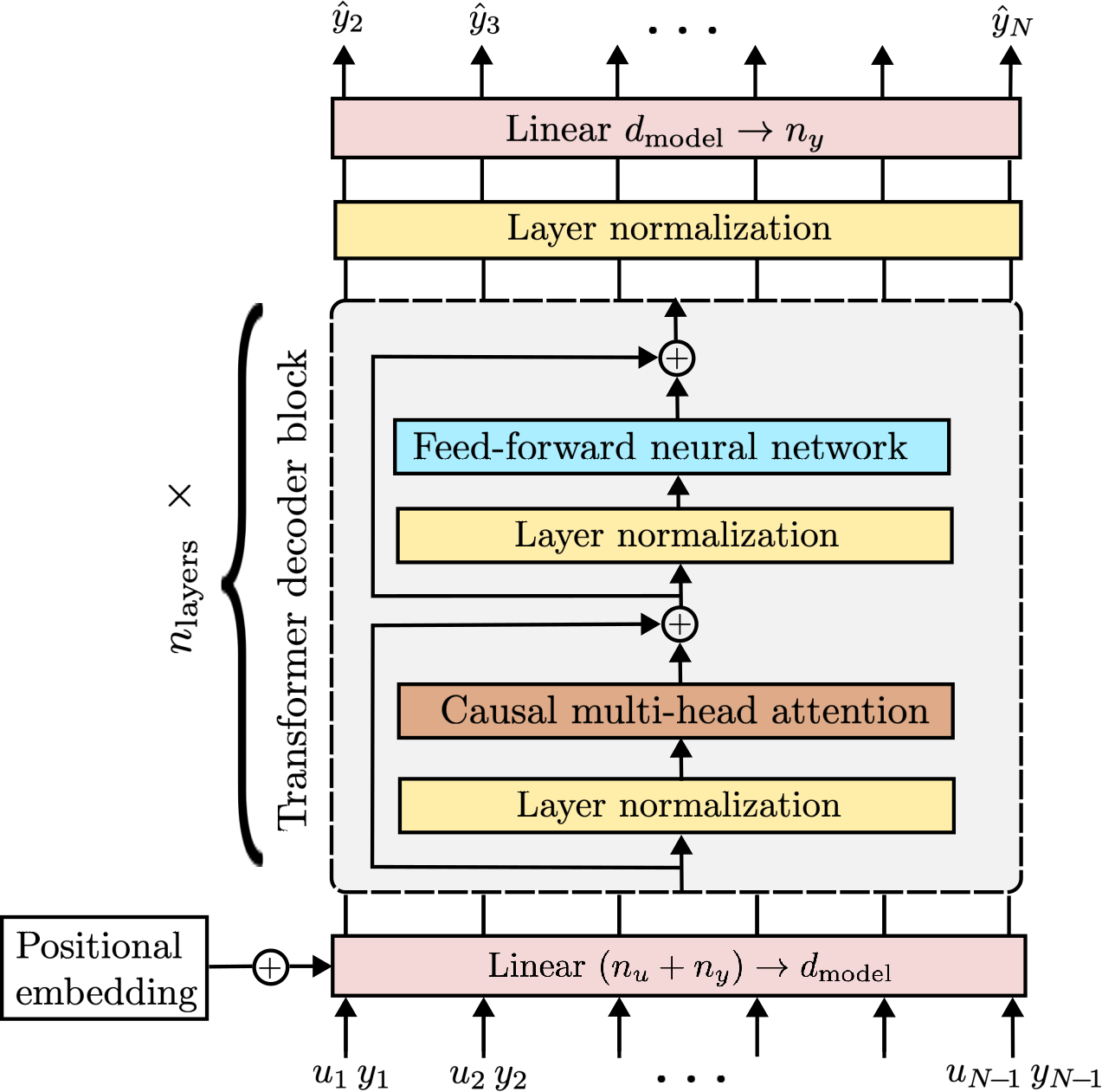

Is it possible to understand the intricacies of a dynamical system not solely from its input/output pattern, but also by observing the behavior of other systems within the same class? This central question drives the study presented in this paper. In response to this query, we introduce a novel paradigm for system identification, addressing two primary tasks: one-step-ahead prediction and multi-step simulation. Unlike conventional methods, we do not directly estimate a model for the specific system. Instead, we learn a meta model that represents a class of dynamical systems. This meta model is trained on a potentially infinite stream of synthetic data, generated by simulators whose settings are randomly extracted from a probability distribution. When provided with a context from a new system-specifically, an input/output sequence-the meta model implicitly discerns its dynamics, enabling predictions of its behavior. The proposed approach harnesses the power of Transformers, renowned for their \emph{in-context learning} capabilities. For one-step prediction, a GPT-like decoder-only architecture is utilized, whereas the simulation problem employs an encoder-decoder structure. Initial experimental results affirmatively answer our foundational question, opening doors to fresh research avenues in system identification.

PDF Abstract