HeteFedRec: Federated Recommender Systems with Model Heterogeneity

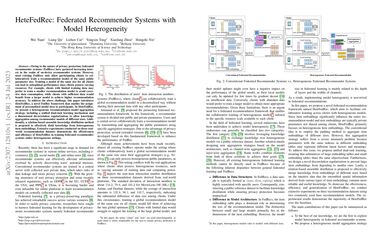

Owing to their privacy-preserving nature, federated recommender systems (FedRecs) have garnered increasing interest in the realm of on-device recommender systems. However, most existing FedRecs only allow participating clients to collaboratively train recommendation models of the same public parameter size. Training a model of the same size for all clients can lead to suboptimal performance, since clients possess varying resources. For example, clients with limited training data may prefer to train a smaller recommendation model to avoid excessive data consumption, while clients with sufficient data would benefit from a larger model to achieve higher recommendation accuracy. To address this challenge, this paper introduces HeteFedRec, a novel FedRec framework that enables the assignment of personalized model sizes to participants. HeteFedRec uses a heterogeneous recommendation model aggregation strategy, comprising a unified dual-task learning mechanism and a dimensional decorrelation regularization, to allow knowledge aggregation among recommender models of different sizes. Additionally, a relation-based ensemble knowledge distillation method is proposed to effectively distil knowledge from heterogeneous item embeddings. Extensive experiments conducted on three real-world recommendation datasets demonstrate the effectiveness and efficiency of HeteFedRec in training federated recommender systems under heterogeneous settings.


MovieLens
