Entity Tracking via Effective Use of Multi-Task Learning Model and Mention-guided Decoding

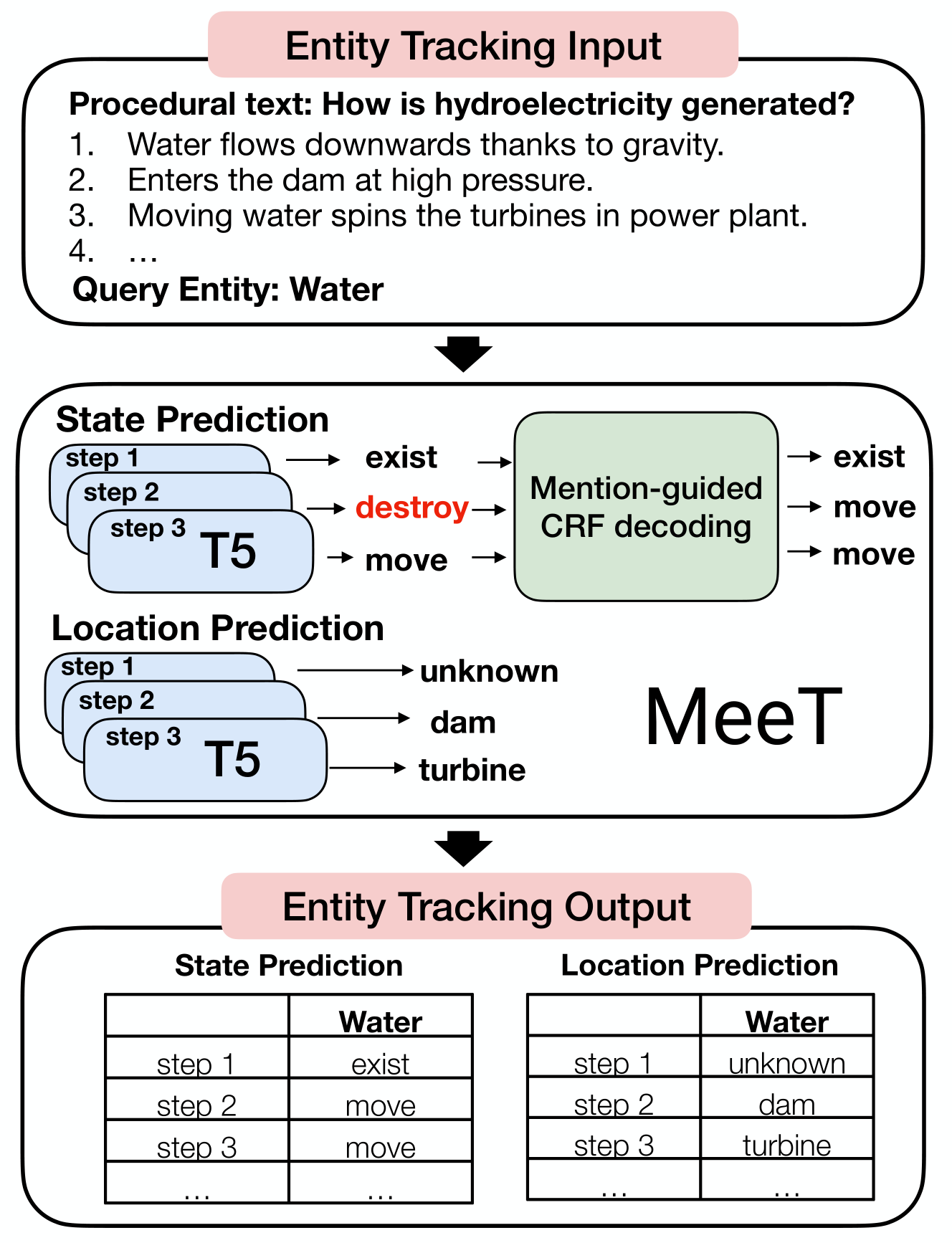

Cross-task knowledge transfer via multi-task learning has recently made remarkable progress in general NLP tasks. However, entity tracking on procedural text has not benefited from such knowledge transfer because of its distinct formulation, i.e., tracking the event flow while following structural constraints. State-of-the-art entity tracking approaches either design complicated model architectures or rely on task-specific pre-training to achieve good results. To close this gap, we propose MeeT, a Multi-task learning-enabled entity Tracking approach, which utilizes knowledge gained from general-domain tasks to improve entity tracking. Specifically, MeeT first fine-tunes T5, a pre-trained multi-task learning model, with QA formats specialized for entity tracking, and then employs our customized decoding strategy to satisfy the structural constraints. MeeT achieves state-of-the-art performance on two popular entity tracking datasets, even though it does not require any task-specific architecture design or pre-training.
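The abstract does not spell out the QA formats or the decoding rules, so the following is only a minimal illustrative sketch of the general idea: phrase each (entity, step) pair as a question, then pick per-step predictions that respect a simple structural constraint. The prompt template, the action labels (`create`, `destroy`, `move`, `none`), the existence constraint, and the function names `format_question` and `constrained_decode` are all assumptions made for illustration. This is not MeeT's actual format or its mention-guided decoding, and the per-step scores are assumed to come from some fine-tuned model.

```python
from typing import Dict, List

# Hypothetical constraint table (an assumption, not taken from the paper):
# which actions are allowed given whether the entity currently exists.
VALID_ACTIONS = {
    True: {"none", "move", "destroy"},   # entity exists
    False: {"none", "create"},           # entity does not exist
}


def format_question(entity: str, step: str) -> str:
    """Build a QA-style input string for one (entity, step) pair.

    The template is illustrative only; the abstract does not give the
    actual wording used to fine-tune T5.
    """
    return f"question: What happens to {entity} in this step? context: {step}"


def constrained_decode(
    step_scores: List[Dict[str, float]], exists_at_start: bool
) -> List[str]:
    """Greedily pick the best-scoring valid action at each step.

    step_scores[i] maps each action label to a model score for step i.
    Actions that contradict the entity's current existence are skipped.
    """
    exists = exists_at_start
    decoded = []
    for scores in step_scores:
        allowed = {a: s for a, s in scores.items() if a in VALID_ACTIONS[exists]}
        # Fall back to "none" if the model scored no valid action at all.
        action = max(allowed, key=allowed.get) if allowed else "none"
        decoded.append(action)
        if action == "create":
            exists = True
        elif action == "destroy":
            exists = False
    return decoded


if __name__ == "__main__":
    print(format_question("water", "Roots absorb water from the soil."))
    scores = [
        {"destroy": 0.9, "create": 0.05, "move": 0.03, "none": 0.02},
        {"create": 0.6, "destroy": 0.3, "none": 0.1, "move": 0.0},
    ]
    print(constrained_decode(scores, exists_at_start=False))
```

In this toy run the unconstrained top choice at the first step (`destroy`) is rejected because the entity does not yet exist, so the decoder falls back to the best valid action. A greedy pass is the simplest option; a search over whole sequences would be a natural alternative if per-step choices interact.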

ProPara
