Structured Knowledge Grounding for Question Answering

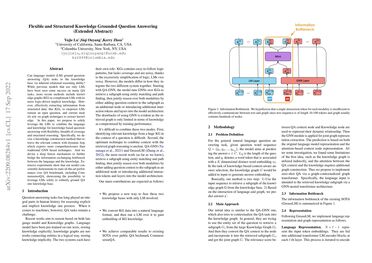

Can language models (LMs) ground question-answering (QA) tasks in a knowledge base through their inherent relational reasoning ability? While previous models that use only LMs have seen some success on many QA tasks, more recent methods include knowledge graphs (KGs) to complement LMs' implicit knowledge with more logic-driven knowledge. However, how to effectively extract information from structured data such as KGs to empower LMs remains an open question, and current models rely on graph techniques to extract knowledge. In this paper, we propose to leverage LMs alone to combine language and knowledge for knowledge-based question answering with flexibility, breadth of coverage, and structured reasoning. Specifically, we devise a knowledge construction method that retrieves the relevant context with a dynamic hop, which yields more comprehensive context than traditional GNN-based techniques. We also devise a deep fusion mechanism to further ease the information-exchange bottleneck between language and knowledge. Extensive experiments show that our model achieves state-of-the-art performance on the CommonsenseQA benchmark, showcasing the possibility of leveraging LMs alone to robustly ground QA in a knowledge base.
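As an illustration of what retrieval "with a dynamic hop" could look like, the following is a minimal sketch and not the paper's implementation. It assumes the KG is a list of (head, relation, tail) triples, that the hop count grows until a minimum number of facts has been collected, and that the retrieved triples are flattened into plain text so an LM can read them. The function names, the `min_facts` stopping rule, and the toy triples are all hypothetical.

```python
from collections import defaultdict


def retrieve_with_dynamic_hop(triples, seeds, min_facts=3, max_hops=3):
    """Expand outward from the seed entities one hop at a time.

    Stops as soon as at least `min_facts` triples are collected or
    `max_hops` is reached. The stopping rule is an assumption made for
    this sketch; the abstract does not specify one.
    """
    # Index every triple under both of its endpoints (undirected expansion).
    adjacency = defaultdict(list)
    for head, rel, tail in triples:
        adjacency[head].append((head, rel, tail))
        adjacency[tail].append((head, rel, tail))

    frontier, visited = set(seeds), set(seeds)
    facts, seen = [], set()
    hops = 0
    while frontier and hops < max_hops and len(facts) < min_facts:
        hops += 1
        next_frontier = set()
        for entity in sorted(frontier):  # sorted for deterministic output
            for triple in adjacency[entity]:
                if triple not in seen:
                    seen.add(triple)
                    facts.append(triple)
                for node in (triple[0], triple[2]):
                    if node not in visited:
                        visited.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return facts, hops


def linearize(facts):
    """Turn triples into sentences that can be appended to the question text."""
    return " ".join(f"{h} {r.replace('_', ' ')} {t}." for h, r, t in facts)


# Toy knowledge graph (hypothetical data, for illustration only).
toy_kg = [
    ("bird", "capable_of", "fly"),
    ("bird", "has_a", "wing"),
    ("wing", "used_for", "flight"),
    ("penguin", "is_a", "bird"),
]
facts, hops = retrieve_with_dynamic_hop(toy_kg, ["penguin"], min_facts=2)
context = linearize(facts)
```

In this toy run, one hop from `penguin` yields a single fact, so the search widens to a second hop before stopping. A fixed-hop retriever would have to commit to that depth in advance for every question.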

OpenBookQA

CommonsenseQA
