Flamingo: a Visual Language Model for Few-Shot Learning

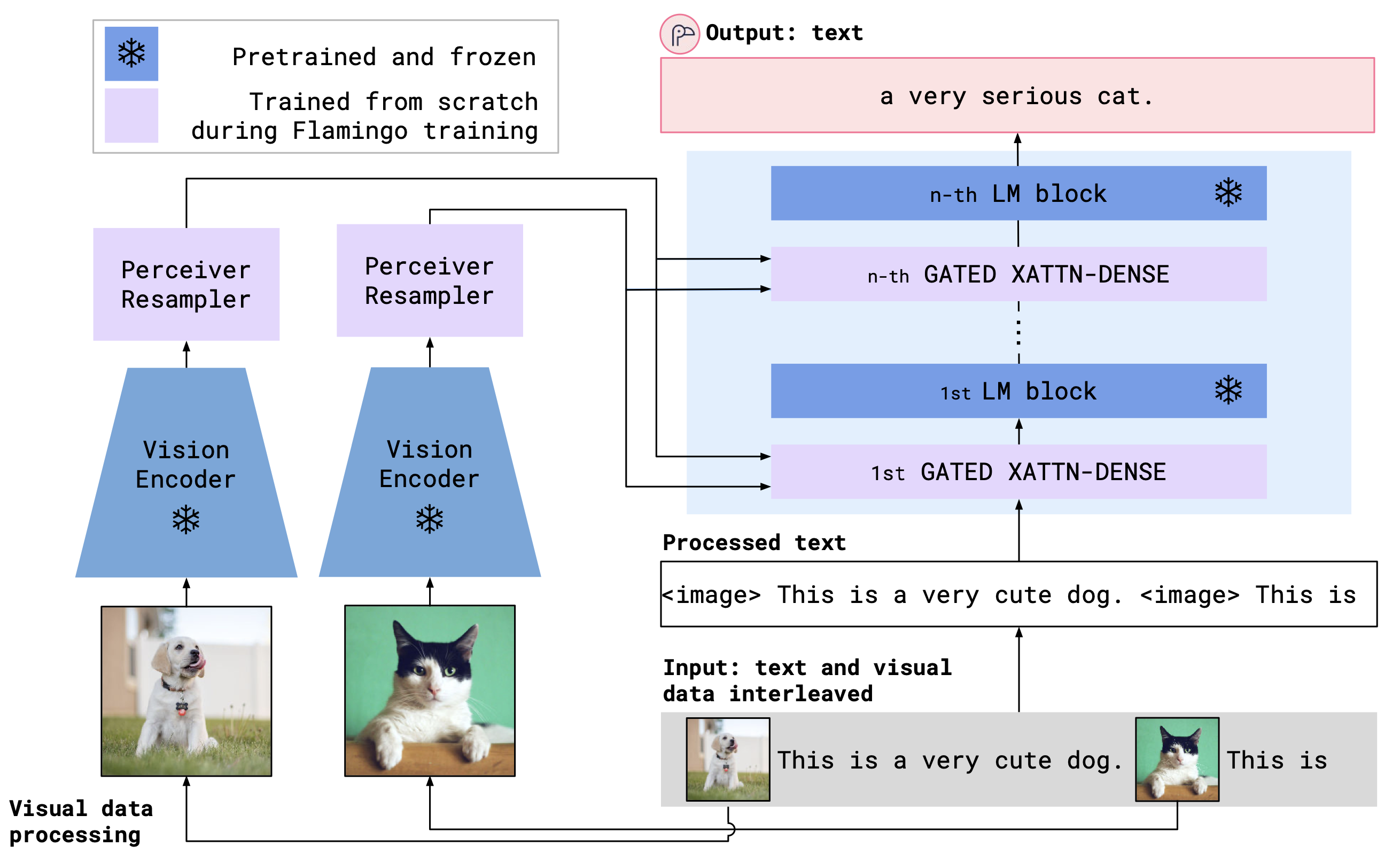

Building models that can be rapidly adapted to novel tasks using only a handful of annotated examples is an open challenge for multimodal machine learning research. We introduce Flamingo, a family of Visual Language Models (VLMs) with this ability. We propose key architectural innovations to: (i) bridge powerful pretrained vision-only and language-only models, (ii) handle sequences of arbitrarily interleaved visual and textual data, and (iii) seamlessly ingest images or videos as inputs. Thanks to their flexibility, Flamingo models can be trained on large-scale multimodal web corpora containing arbitrarily interleaved text and images, which is key to endowing them with in-context few-shot learning capabilities. We perform a thorough evaluation of our models, exploring and measuring their ability to rapidly adapt to a variety of image and video tasks. These include open-ended tasks such as visual question-answering, where the model is prompted with a question that it has to answer; captioning tasks, which evaluate the ability to describe a scene or an event; and close-ended tasks such as multiple-choice visual question-answering. For tasks lying anywhere on this spectrum, a single Flamingo model can achieve a new state of the art with few-shot learning, simply by prompting the model with task-specific examples. On numerous benchmarks, Flamingo outperforms models fine-tuned on thousands of times more task-specific data.
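As a rough illustration of what "prompting the model with task-specific examples" can look like for visual question answering, the sketch below assembles an interleaved prompt from a few support examples plus one query. The `Shot` class, the `build_few_shot_prompt` helper, and the `<image>` and `<EOC>` marker strings are hypothetical names chosen for this example; they are not taken from the abstract, and no model is called.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical marker strings, assumed for illustration only.
IMAGE_TAG = "<image>"   # marks where an image sits in the text stream
END_OF_CHUNK = "<EOC>"  # separates one support example from the next


@dataclass
class Shot:
    """One annotated support example: an image, a question, and its answer."""
    image_path: str
    question: str
    answer: str


def build_few_shot_prompt(
    shots: List[Shot], query_image: str, query_question: str
) -> Tuple[str, List[str]]:
    """Return the interleaved text prompt and the images in the order it references them."""
    parts: List[str] = []
    images: List[str] = []
    for shot in shots:
        parts.append(
            f"{IMAGE_TAG}Question: {shot.question} Answer: {shot.answer}{END_OF_CHUNK}"
        )
        images.append(shot.image_path)
    # The query follows the same pattern but leaves the answer open for the model.
    parts.append(f"{IMAGE_TAG}Question: {query_question} Answer:")
    images.append(query_image)
    return "".join(parts), images


shots = [
    Shot("cat.jpg", "What animal is this?", "a cat"),
    Shot("bus.jpg", "What color is the bus?", "red"),
]
prompt, images = build_few_shot_prompt(shots, "dog.jpg", "What animal is this?")
print(prompt)
```

The only task-specific ingredient here is the list of support examples: swapping the question and answer template for a caption template would turn the same structure into a captioning prompt.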

DeepMind 2022

Tasks

Few-Shot Learning

Generative Visual Question Answering

Language Modelling

Medical Visual Question Answering

Multiple-choice

Question Answering

Temporal/Causal QA

Video Question Answering

Video Understanding

Visual Question Answering

Visual Question Answering (VQA)

Zero-Shot Cross-Modal Retrieval

Zero-Shot Learning

Zero-Shot Video Question Answer

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Image-to-text R@1 | 65.9 | #9 |
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Image-to-text R@5 | 87.3 | #8 |
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Image-to-text R@10 | 92.9 | #7 |
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Text-to-image R@1 | 48.0 | #9 |
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Text-to-image R@5 | 73.3 | #9 |
| Zero-Shot Cross-Modal Retrieval | COCO 2014 | Flamingo | Text-to-image R@10 | 82.1 | #8 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Image-to-text R@1 | 89.3 | #10 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Image-to-text R@5 | 98.8 | #11 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Image-to-text R@10 | 99.7 | #7 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Text-to-image R@1 | 79.5 | #8 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Text-to-image R@5 | 95.3 | #7 |
| Zero-Shot Cross-Modal Retrieval | Flickr30k | Flamingo | Text-to-image R@10 | 97.9 | #5 |
| Meme Classification | Hateful Memes | Flamingo (few-shot:32) | ROC-AUC | 0.700 | #8 |
| Visual Question Answering (VQA) | MSRVTT-QA | Flamingo (32-shot) | Accuracy | 0.310 | #29 |
| Visual Question Answering (VQA) | MSRVTT-QA | Flamingo (0-shot) | Accuracy | 0.174 | #31 |
| Visual Question Answering (VQA) | MSRVTT-QA | Flamingo | Accuracy | 0.474 | #5 |
| Temporal/Causal QA | NExT-QA | Flamingo (32-shot) | WUPS | 33.5 | #4 |
| Temporal/Causal QA | NExT-QA | Flamingo (0-shot) | WUPS | 26.7 | #7 |
| Visual Question Answering (VQA) | OK-VQA | Flamingo3B | Accuracy | 41.2 | #26 |
| Visual Question Answering (VQA) | OK-VQA | Flamingo80B | Accuracy | 50.6 | #18 |
| Visual Question Answering (VQA) | OK-VQA | Flamingo9B | Accuracy | 44.7 | #23 |
| Medical Visual Question Answering | PMC-VQA | Open-Flamingo | Accuracy | 26.4 | #2 |
| Visual Question Answering (VQA) | PMC-VQA | Open-Flamingo | Accuracy | 26.4 | #2 |
| Generative Visual Question Answering | PMC-VQA | Open-Flamingo | BLEU-1 | 4.1 | #3 |
| Action Recognition | RareAct | 🦩 Flamingo | mWAP | 60.8 | #1 |
| Video Question Answering | STAR Benchmark | Flamingo-80B (4-shot) | Average Accuracy | 42.4 | #11 |
| Zero-Shot Video Question Answer | STAR Benchmark | Flamingo-9B | Accuracy | 39.7 | #5 |
| Zero-Shot Video Question Answer | STAR Benchmark | Flamingo-9B | Accuracy | 41.8 | #2 |
| Zero-Shot Video Question Answer | STAR Benchmark | Flamingo-80B | Accuracy | 39.7 | #5 |
| Video Question Answering | STAR Benchmark | Flamingo-9B (4-shot) | Average Accuracy | 42.8 | #10 |
| Video Question Answering | STAR Benchmark | Flamingo-80B (0-shot) | Average Accuracy | 39.7 | #13 |
| Video Question Answering | STAR Benchmark | Flamingo-9B (0-shot) | Average Accuracy | 41.8 | #12 |
| Visual Question Answering (VQA) | VQA v2 test-dev | Flamingo 3B | Accuracy | 49.2 | #55 |
| Visual Question Answering (VQA) | VQA v2 test-dev | Flamingo 9B | Accuracy | 51.8 | #52 |
| Visual Question Answering (VQA) | VQA v2 test-dev | Flamingo 80B | Accuracy | 56.3 | #49 |
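The retrieval rows above report R@1, R@5 and R@10. As a reminder of what such a Recall@K number measures, here is a minimal sketch. It assumes a square similarity matrix in which query `i` has exactly one correct candidate, also at index `i`. That is a simplification: benchmarks such as COCO pair each image with several captions, and the scoring used to produce the similarities is not specified here. The function name `recall_at_k` and the toy matrix are illustrative only.

```python
from typing import Sequence


def recall_at_k(similarity: Sequence[Sequence[float]], k: int) -> float:
    """Fraction of queries whose matching candidate (same index) ranks in the top k."""
    hits = 0
    for query_index, scores in enumerate(similarity):
        # Candidate indices ordered from most to least similar to this query.
        ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
        if query_index in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy 3x3 similarity matrix: rows are queries, columns are candidates.
toy = [
    [0.9, 0.1, 0.3],  # correct candidate (index 0) is ranked first
    [0.2, 0.4, 0.8],  # correct candidate (index 1) is ranked second
    [0.1, 0.7, 0.6],  # correct candidate (index 2) is ranked second
]
print(recall_at_k(toy, 1))  # one of three queries hits at k=1
print(recall_at_k(toy, 2))  # all three queries hit at k=2
```

Leaderboards usually state the value as a percentage, so the fraction returned here would be multiplied by 100 before being compared with the table.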

Datasets

MS COCO

Flickr30k

Visual Question Answering v2.0

OK-VQA

TextVQA

YouCook2

VisDial

Hateful Memes

VizWiz

VATEX

NExT-QA

iVQA

STAR Benchmark

RareAct