Fine-Grained Re-Identification

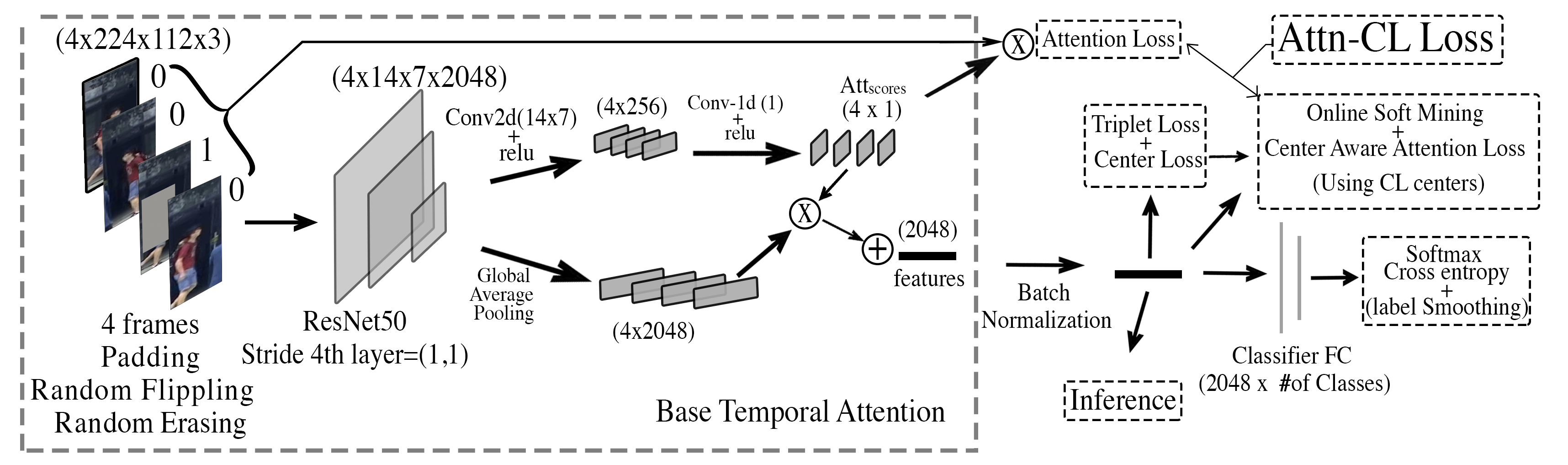

Research into re-identification (ReID) is gaining momentum in computer vision, owing to its many use cases and its zero-shot learning nature. This paper proposes a computationally efficient fine-grained ReID model, FGReID, which is among the first models to unify image and video ReID while keeping the number of trainable parameters minimal. FGReID takes advantage of video-based pre-training and spatial feature attention to improve performance on both video and image ReID tasks. FGReID achieves state-of-the-art (SOTA) results on the MARS, iLIDS-VID, and PRID-2011 video person ReID benchmarks. Eliminating temporal pooling yields an image ReID model that surpasses SOTA on the CUHK01 and Market1501 image person ReID benchmarks. FGReID also achieves near-SOTA performance on the vehicle ReID dataset VeRi, demonstrating its ability to generalize. Additionally, we conduct an ablation study analyzing the key features that influence model performance on ReID tasks. Finally, we discuss the moral dilemmas related to ReID tasks, including the potential for misuse. Code for this work is publicly available at https://github.com/ppriyank/Fine-grained-ReIdentification.
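To illustrate why removing temporal pooling turns a video model into an image model, here is a minimal sketch. It assumes the pooling step is a plain mean over per-frame feature vectors; the abstract does not say which operator FGReID uses, and the function name `temporal_average_pool` is hypothetical rather than taken from the released code.

```python
from typing import List, Sequence


def temporal_average_pool(frame_features: Sequence[Sequence[float]]) -> List[float]:
    """Average T per-frame feature vectors (each of length D) into one clip-level vector.

    Assumption: mean pooling over time. The actual FGReID operator may differ.
    """
    if not frame_features:
        raise ValueError("need at least one frame")
    dim = len(frame_features[0])
    if any(len(f) != dim for f in frame_features):
        raise ValueError("all frames must have the same feature dimension")
    num_frames = len(frame_features)
    # Average each feature dimension across all frames.
    return [sum(f[d] for f in frame_features) / num_frames for d in range(dim)]


# Video input: two frames, each with a 2-dimensional feature vector.
clip_embedding = temporal_average_pool([[1.0, 2.0], [3.0, 4.0]])

# Image input: a single "frame", so pooling leaves the features unchanged.
image_embedding = temporal_average_pool([[0.5, 1.5]])
```

With a single frame the mean is the identity, so under this assumption the same per-frame feature extractor can serve image ReID once the pooling step is dropped.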

Market-1501

MARS

iLIDS-VID