Domain and View-point Agnostic Hand Action Recognition

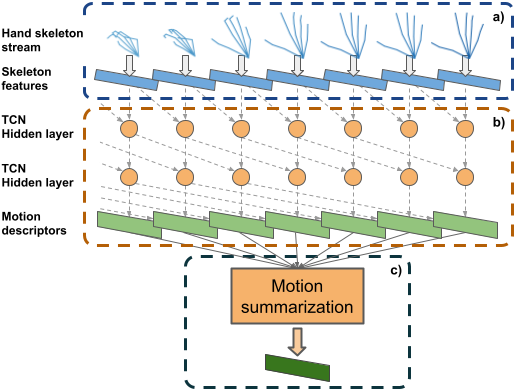

Hand action recognition is a special case of action recognition with applications in human-robot interaction, virtual reality, and life-logging systems. Building action classifiers that work across such heterogeneous action domains is very challenging: differences between actions within a given application are often subtle, while variations across domains (e.g. virtual reality vs. life-logging) are large. This work introduces a novel skeleton-based hand motion representation model that tackles this problem. The proposed framework is agnostic to the application domain and to the camera recording view-point. When working on a single domain (intra-domain action classification), our approach performs better than or on par with current state-of-the-art methods on well-known hand action recognition benchmarks. More importantly, when performing hand action recognition on action domains and camera perspectives that our approach has not been trained on (cross-domain action classification), our framework achieves performance comparable to intra-domain state-of-the-art methods. These experiments show the robustness and generalization capabilities of our framework.

SHREC

First-Person Hand Action Benchmark
