Automatic Evaluation of Excavator Operators using Learned Reward Functions

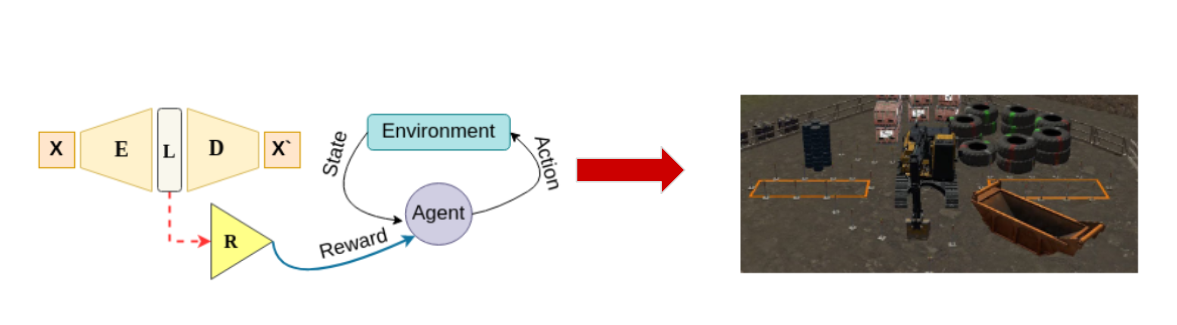

Training novice users to operate an excavator requires the presence of expert teachers. Because the task is complex, skilled experts are expensive to find: the training process is time-consuming and demands sustained attention. Moreover, since human evaluators tend to be biased, the evaluation process is noisy and leads to high variance in the final scores of operators with similar skills. In this work, we address these issues and propose a novel strategy for the automatic evaluation of excavator operators. To evaluate performance, we take into account the internal dynamics of the excavator and the safety criterion at every time step. To further validate our approach, we use this score prediction model as a source of reward for a reinforcement learning agent that learns to maneuver an excavator in a simulated environment closely replicating real-world dynamics. Our results show that a policy learned with these external reward prediction models produces safer solutions that follow the required dynamic constraints, compared with a policy trained with task-based reward functions only, bringing it one step closer to real-life adoption. For future research, we release our codebase at https://github.com/pranavAL/InvRL_Auto-Evaluate and video results at https://drive.google.com/file/d/1jR1otOAu8zrY8mkhUOUZW9jkBOAKK71Z/view?usp=share_link .
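To make the idea of scoring an operator at every time step more concrete, the following is a minimal sketch, not the implementation from the released codebase. It assumes the learned models can be treated as two callables that map an observation to a non-negative penalty, one for the dynamics and one for safety, and that they are combined with the task reward as a weighted sum. The names `CombinedReward`, `dynamics_penalty`, `safety_penalty`, and both weights are hypothetical, and the weighted-sum form is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical interface: a learned model maps one observation to a penalty >= 0.
PenaltyModel = Callable[[Sequence[float]], float]


@dataclass
class CombinedReward:
    """Task reward minus weighted dynamics and safety penalties (assumed form)."""

    dynamics_penalty: PenaltyModel
    safety_penalty: PenaltyModel
    dynamics_weight: float = 1.0  # hypothetical weighting, not from the paper
    safety_weight: float = 1.0    # hypothetical weighting, not from the paper

    def step_reward(self, task_reward: float, observation: Sequence[float]) -> float:
        """Reward for a single time step."""
        return (
            task_reward
            - self.dynamics_weight * self.dynamics_penalty(observation)
            - self.safety_weight * self.safety_penalty(observation)
        )

    def episode_score(
        self,
        task_rewards: Sequence[float],
        observations: Sequence[Sequence[float]],
    ) -> float:
        """Sum of per-step rewards over one episode."""
        return sum(
            self.step_reward(r, obs) for r, obs in zip(task_rewards, observations)
        )
```

In such a setup the same `step_reward` value could serve both as the per-step evaluation of a human operator and as the reward signal for a reinforcement learning agent, while a policy trained on `task_reward` alone would be the task-only baseline.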
