Inexact Newton Methods for Stochastic Non-Convex Optimization with Applications to Neural Network Training

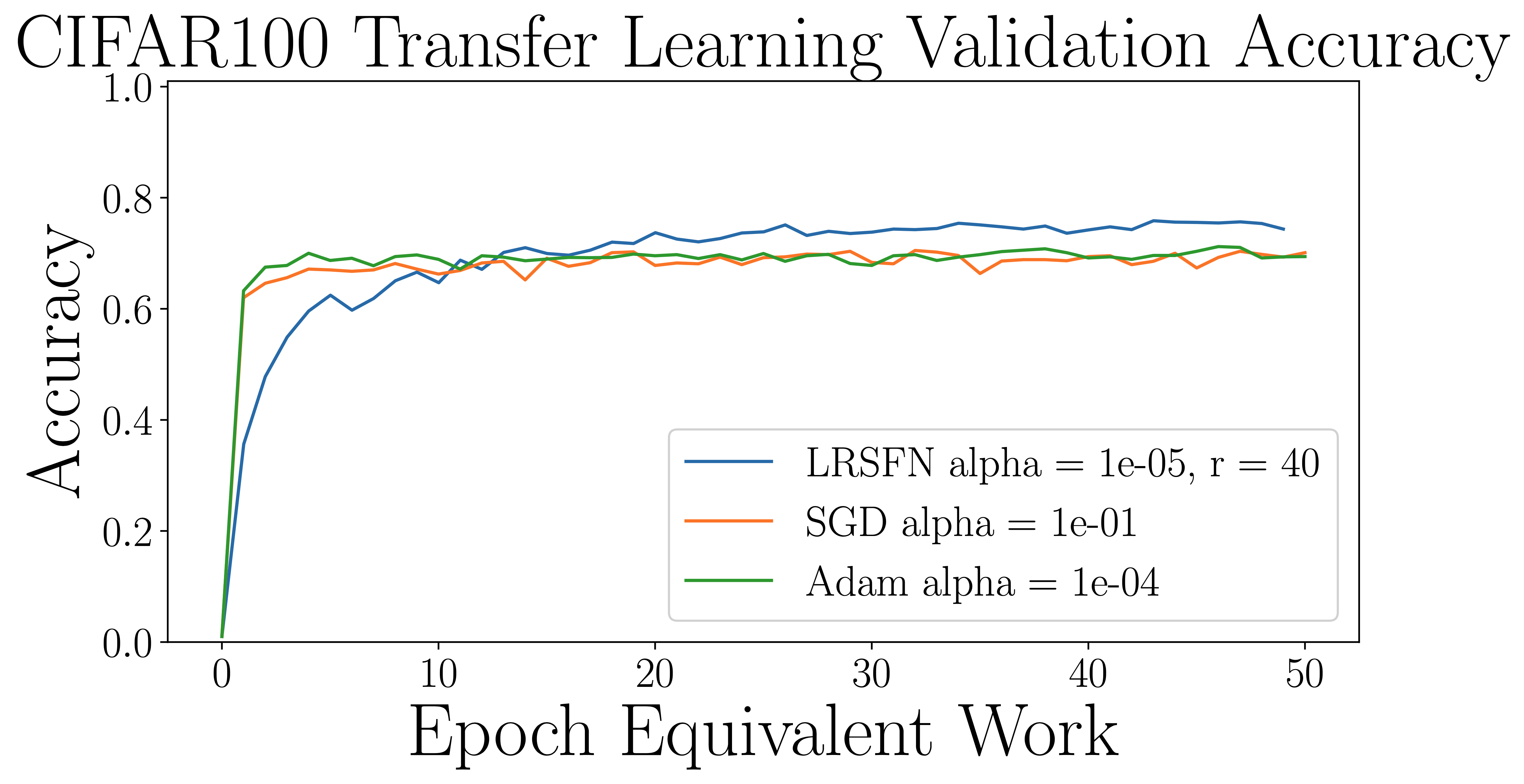

We survey sub-sampled inexact Newton methods and consider their application in non-convex settings. Under mild assumptions, we derive convergence rates in expected value for sub-sampled low-rank Newton methods and sub-sampled inexact Newton-Krylov methods. These convergence rates quantify the errors incurred in sub-sampling the Hessian and gradient, in approximately solving the Newton linear system, and in choosing the regularization and step-length parameters. We deploy these methods to train convolutional autoencoders on the MNIST, CIFAR10 and LFW data sets. The numerical results demonstrate that these sub-sampled inexact Newton methods are competitive with first-order methods in computational cost and can outperform first-order methods in generalization error.
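
To make the ingredients listed above concrete (a sub-sampled gradient, a sub-sampled Hessian, a regularization parameter, an inexact Krylov solve of the Newton system, and a step length), here is a minimal sketch of one possible sub-sampled inexact Newton-Krylov loop. It is not the authors' implementation and does not cover the low-rank Newton variant. Everything specific in it is an illustrative assumption: the toy non-convex objective (mean squared error of a sigmoid model), the batch sizes, the regularization `gamma`, the fixed step `step`, the forcing term `eta`, and all function names (`grad`, `hess_vec`, `truncated_cg`, `subsampled_newton_cg`) are hypothetical. The Krylov solver is conjugate gradient truncated on negative curvature.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def loss(w, data):
    # Hypothetical toy objective: mean of 0.5 * (sigmoid(w.x) - y)^2, non-convex in w.
    return sum(0.5 * (sigmoid(dot(w, x)) - y) ** 2 for x, y in data) / len(data)


def grad(w, batch):
    # Gradient of the loss averaged over a sub-sample: mean_i (s - y) s' x_i.
    g = [0.0] * len(w)
    for x, y in batch:
        s = sigmoid(dot(w, x))
        coef = (s - y) * s * (1.0 - s)
        for j, xj in enumerate(x):
            g[j] += coef * xj
    return [gj / len(batch) for gj in g]


def hess_vec(w, batch, v):
    # Exact Hessian-vector product of the sub-sampled loss:
    # H v = mean_i c_i (x_i . v) x_i, with c_i = s'^2 + (s - y) s' (1 - 2s).
    hv = [0.0] * len(w)
    for x, y in batch:
        s = sigmoid(dot(w, x))
        ds = s * (1.0 - s)
        c = ds * ds + (s - y) * ds * (1.0 - 2.0 * s)
        xv = dot(x, v)
        for j, xj in enumerate(x):
            hv[j] += c * xv * xj
    return [h / len(batch) for h in hv]


def truncated_cg(apply_a, b, tol, max_iter):
    # Approximately solve A p = b with conjugate gradient. Stop when the residual
    # norm falls below tol, after max_iter steps, or on non-positive curvature
    # (A can be indefinite in non-convex problems).
    p = [0.0] * len(b)
    r = list(b)
    d = list(r)
    rr = dot(r, r)
    for _ in range(max_iter):
        if math.sqrt(rr) <= tol:
            break
        ad = apply_a(d)
        dad = dot(d, ad)
        if dad <= 0.0:
            # Keep the current iterate, or fall back to b (= -g, steepest descent).
            return p if any(p) else list(b)
        alpha = rr / dad
        p = [pi + alpha * di for pi, di in zip(p, d)]
        r = [ri - alpha * adi for ri, adi in zip(r, ad)]
        rr_new = dot(r, r)
        d = [ri + (rr_new / rr) * di for ri, di in zip(r, d)]
        rr = rr_new
    return p


def subsampled_newton_cg(data, w0, iters=20, grad_batch=200, hess_batch=50,
                         gamma=0.1, step=1.0, eta=0.1, cg_iters=10, seed=0):
    rng = random.Random(seed)
    w = list(w0)
    history = [loss(w, data)]
    for _ in range(iters):
        g_batch = rng.sample(data, grad_batch)  # gradient sub-sample
        h_batch = rng.sample(data, hess_batch)  # smaller Hessian sub-sample
        g = grad(w, g_batch)

        def apply_a(v):
            # Regularized sub-sampled Hessian (H_S + gamma * I) applied to v.
            return [hv + gamma * vi for hv, vi in zip(hess_vec(w, h_batch, v), v)]

        # Inexact solve: relative residual tolerance eta * ||g||.
        p = truncated_cg(apply_a, [-gi for gi in g], eta * math.sqrt(dot(g, g)), cg_iters)
        w = [wi + step * pi for wi, pi in zip(w, p)]
        history.append(loss(w, data))
    return w, history


# Usage on synthetic, noise-free data generated from a known weight vector.
data_rng = random.Random(1)
w_true = [1.5, -2.0, 0.5]
data = []
for _ in range(500):
    x = [data_rng.gauss(0.0, 1.0) for _ in range(3)]
    data.append((x, sigmoid(dot(w_true, x))))
w, hist = subsampled_newton_cg(data, [0.0, 0.0, 0.0])
print(hist[0], hist[-1])
```

In this sketch the Hessian sub-sample is deliberately smaller than the gradient sub-sample, since each Hessian-vector product is re-evaluated at every CG iteration. `gamma` keeps the linear system well conditioned, and the early CG exit is what makes the Newton solve inexact.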

PDF Abstract