Benchmarking Neural Network Robustness to Common Corruptions and Surface Variations

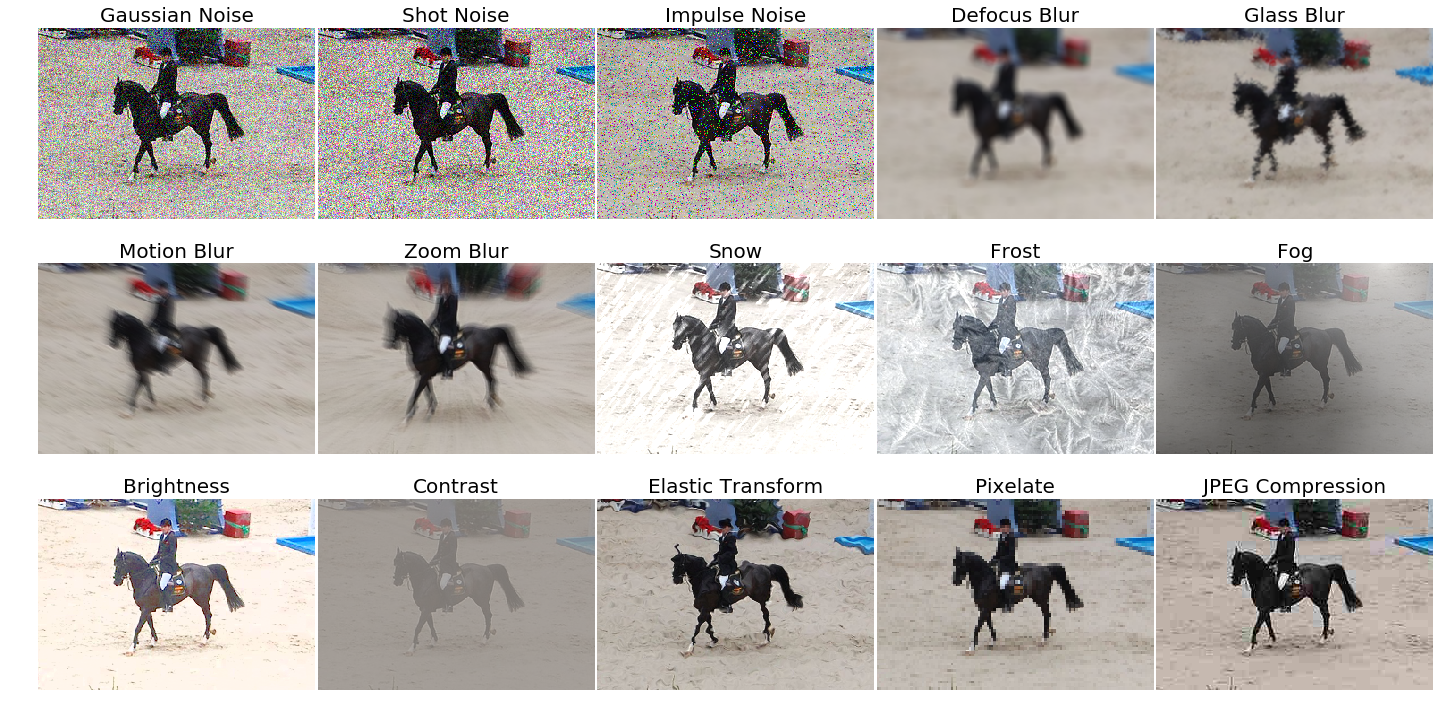

In this paper we establish rigorous benchmarks for image classifier robustness. Our first benchmark, ImageNet-C, standardizes and expands the corruption robustness topic, while showing which classifiers are preferable in safety-critical applications. Unlike recent robustness research, this benchmark evaluates performance on commonplace corruptions not worst-case adversarial corruptions. We find that there are negligible changes in relative corruption robustness from AlexNet to ResNet classifiers, and we discover ways to enhance corruption robustness. Then we propose a new dataset called Icons-50 which opens research on a new kind of robustness, surface variation robustness. With this dataset we evaluate the frailty of classifiers on new styles of known objects and unexpected instances of known classes. We also demonstrate two methods that improve surface variation robustness. Together our benchmarks may aid future work toward networks that learn fundamental class structure and also robustly generalize.

PDF Abstract

Icons-50

Icons-50

ImageNet

ImageNet