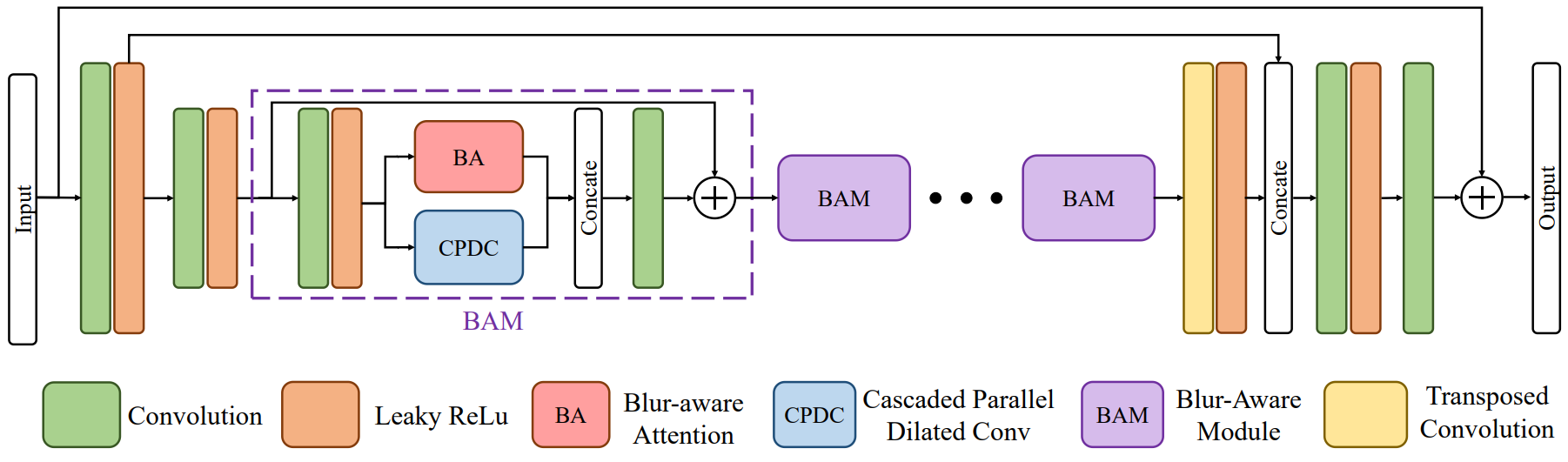

BANet: Blur-aware Attention Networks for Dynamic Scene Deblurring

Image motion blur results from a combination of object motion and camera shake, and the resulting blur is generally directional and non-uniform. Previous research addressed non-uniform blur using self-recurrent multi-scale, multi-patch, or multi-temporal architectures with self-attention and obtained decent results. However, self-recurrent frameworks typically lead to longer inference times, while inter-pixel or inter-channel self-attention may cause excessive memory usage. This paper proposes a Blur-aware Attention Network (BANet) that accomplishes accurate and efficient deblurring via a single forward pass. BANet uses region-based self-attention with multi-kernel strip pooling to disentangle blur patterns of different magnitudes and orientations, and cascaded parallel dilated convolutions to aggregate multi-scale content features. Extensive experimental results on the GoPro and RealBlur benchmarks demonstrate that BANet performs favorably against state-of-the-art methods in blurred image restoration and can provide deblurred results in real time.
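To make the strip pooling idea concrete, the following is a minimal sketch of attention driven by horizontal and vertical strip averages on a single 2-D feature map. It is an illustration only, not the BANet module: the function names `strip_pool` and `strip_attention` are hypothetical, a single strip size is used instead of multiple kernels, and the learned convolutions a real network would apply to the pooled strips are omitted.

```python
import math


def sigmoid(x):
    """Logistic function, used here to turn pooled statistics into a gate."""
    return 1.0 / (1.0 + math.exp(-x))


def strip_pool(feature, axis):
    """Average a 2-D map (list of rows) along one axis.

    axis="horizontal": one value per row (average across the width).
    axis="vertical":   one value per column (average across the height).
    """
    if axis == "horizontal":
        return [sum(row) / len(row) for row in feature]
    if axis == "vertical":
        height = len(feature)
        width = len(feature[0])
        return [sum(row[j] for row in feature) / height for j in range(width)]
    raise ValueError("axis must be 'horizontal' or 'vertical'")


def strip_attention(feature):
    """Gate each position by the sigmoid of its row and column strip averages.

    Assumption: the two strip responses are simply summed; a learned model
    would typically transform them with convolutions first.
    """
    rows = strip_pool(feature, "horizontal")
    cols = strip_pool(feature, "vertical")
    return [
        [value * sigmoid(rows[i] + cols[j]) for j, value in enumerate(row)]
        for i, row in enumerate(feature)
    ]


if __name__ == "__main__":
    fmap = [[1.0, 2.0], [3.0, 4.0]]
    print(strip_pool(fmap, "horizontal"))
    print(strip_pool(fmap, "vertical"))
    print(strip_attention(fmap))
```

Because each strip summarizes an entire row or column, a gate built this way responds to elongated, direction-dependent structure, which is the property the abstract associates with directional blur.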


GoPro

Real Blur Dataset
